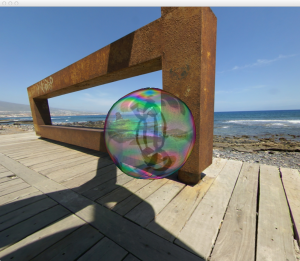

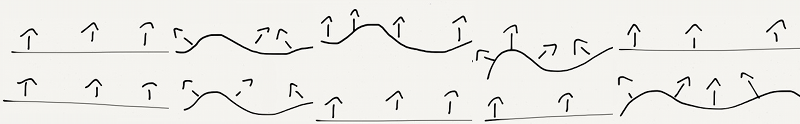

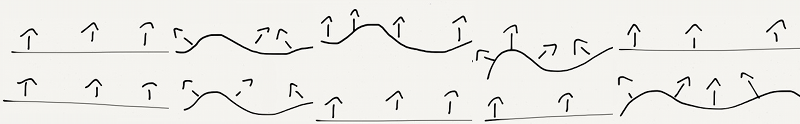

My GLSL spectral rendering shader (here & here) can now do some (environment-mapped) bump mapping. It computes physically based, wavelength-dependent thin-film interference with sampled SPDs (spectral power distributions) on the GPU in real time. All parameters are adjustable at runtime. I’ve implemented five distinct “film setups” that differ in how they treat the film thickness and the normals of the film and of the object surface. The setups are outlined in this sketch:

Here’s a textual description:

- A simple film with constant thickness covers the object.

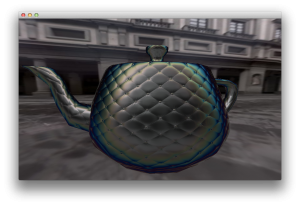

- The surface of the object is “bumped” and the film has a constant thickness.

- Both object and film use regular normals, but the thickness of the film varies. This is fake but looks nice:D.

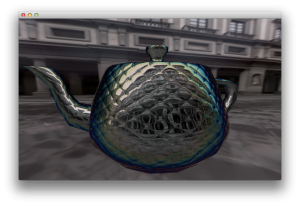

- The object remains unchanged while the film (thickness + normal) is bumped. Basically 3, but correct.

- The object is bumped and the thickness is modulated such that the film’s surface is “flat”(like setup 1). The inverse scenario of 4.
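To make the five setups a bit more concrete, here is a rough GLSL sketch of how the choice of normals and thickness per setup could look. This is only my reading of the list above, not the actual shader code: the struct `FilmSetup`, the function `selectSetup` and all parameter names (`nGeom`, `nBump`, `height`, `dConst`, `dMin`, `dMax`) are hypothetical, and it assumes a bump normal and a height value in [0, 1] have already been fetched from some bump/height map.

```glsl
// Hypothetical sketch: none of these names come from the real shader.
struct FilmSetup {
    vec3  filmNormal;    // normal of the air/film interface
    vec3  objectNormal;  // normal of the film/object interface
    float thickness;     // film thickness in nm
};

// setup:  1..5, numbered as in the list above
// nGeom:  regular (interpolated) surface normal
// nBump:  perturbed normal from a bump/normal map
// height: bump height in [0, 1]
// dConst: thickness used by the constant-thickness setups
// dMin, dMax: thickness range used by the varying-thickness setups
FilmSetup selectSetup(int setup, vec3 nGeom, vec3 nBump, float height,
                      float dConst, float dMin, float dMax) {
    // Setup 1: plain film of constant thickness on an unbumped object.
    FilmSetup s = FilmSetup(nGeom, nGeom, dConst);

    if (setup == 2) {
        // Bumped object; a film of constant thickness follows the bumps.
        s.filmNormal   = nBump;
        s.objectNormal = nBump;
    } else if (setup == 3) {
        // Regular normals, only the thickness varies (the "fake" one).
        s.thickness = mix(dMin, dMax, height);
    } else if (setup == 4) {
        // Only the film is bumped: thicker film where the bump is higher.
        s.filmNormal = nBump;
        s.thickness  = mix(dMin, dMax, height);
    } else if (setup == 5) {
        // Bumped object under a flat film: thinner film where the object is higher.
        s.objectNormal = nBump;
        s.thickness    = mix(dMax, dMin, height);
    }
    return s;
}
```

The returned normals and thickness would then feed into the interference and environment-lookup code. Treating setup 5 as setup 4 with the thickness ramp reversed is an assumption that simply follows the “inverse scenario” wording.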

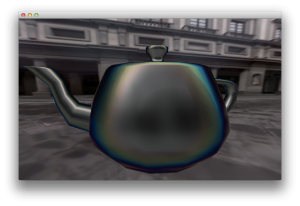

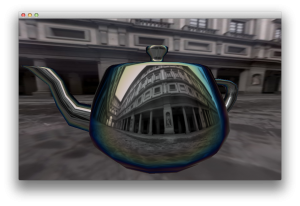

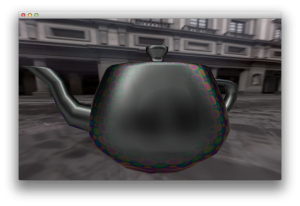

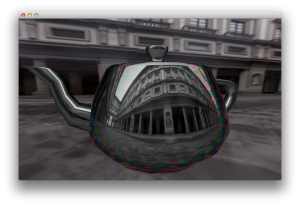

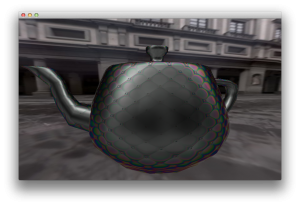

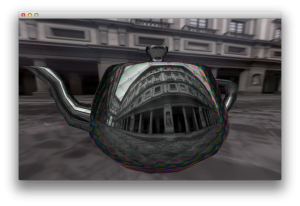

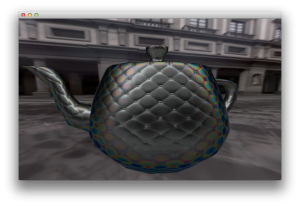

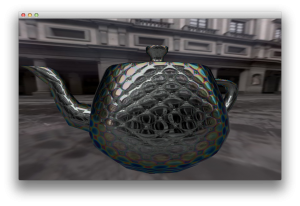

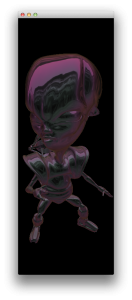

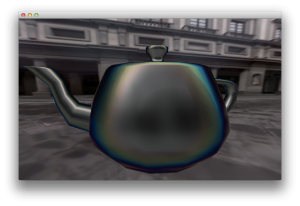

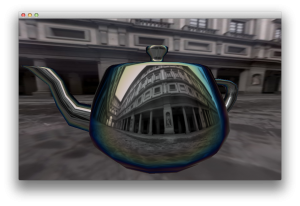

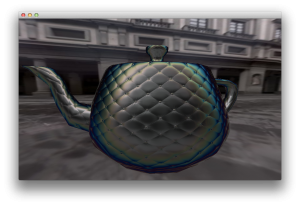

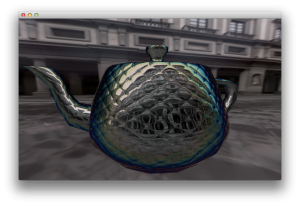

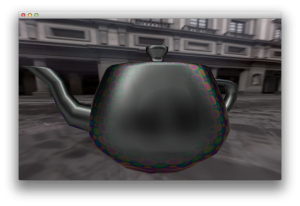

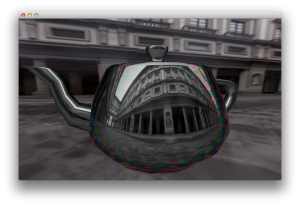

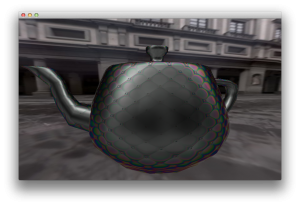

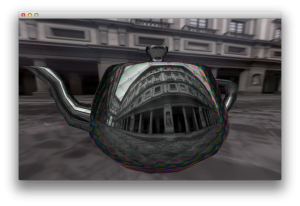

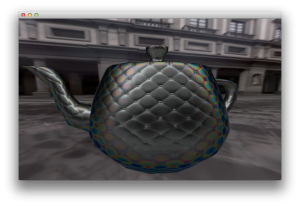

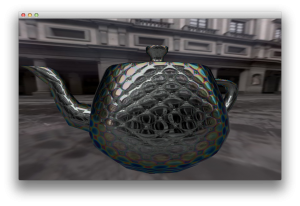

Finally, some screenshots from the same angle with the same film settings: refractive index = 1.09, film thickness 550-1170 nm, CIE D65 light, silver material. The matte Utah teapot is shown on the left, the shiny teapot on the right:


I hope to record a demo video soon (probably this week). While going through the source code I also noticed that there’s no dispersion yet, as the refractive index is constant across all wavelengths. This should be easy to change; I just need some data.
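To show where dispersion would plug in, here is a minimal GLSL sketch of a per-wavelength thin-film evaluation with a wavelength-dependent film index. It is not the shader's actual code. It swaps in Cauchy's equation, n(λ) = A + B/λ², as a stand-in for measured data, uses the standard Airy formula for a single film, and treats the substrate index as real (a metal like silver would really need a complex index). All names (`filmIndex`, `filmReflectance`, `filmColorXYZ`, the uniforms, `NUM_SAMPLES`) are hypothetical.

```glsl
// Hypothetical sketch: none of these names come from the real shader.
const float PI = 3.14159265358979;
const int   NUM_SAMPLES = 16;          // number of spectral samples (assumed)

uniform float lambdaNm[NUM_SAMPLES];   // sample wavelengths in nm
uniform float lightSPD[NUM_SAMPLES];   // illuminant SPD at those wavelengths
uniform vec3  cmfXYZ[NUM_SAMPLES];     // CIE color matching functions
uniform float cauchyA;                 // Cauchy coefficient A (dimensionless)
uniform float cauchyB;                 // Cauchy coefficient B in nm^2 (0 = no dispersion)

// Wavelength-dependent film index, n = A + B / lambda^2 (lambda in nm).
float filmIndex(float lambda) {
    return cauchyA + cauchyB / (lambda * lambda);
}

// Fresnel amplitude coefficients (x: s-polarized, y: p-polarized)
// for light going from real index ni into real index nt.
vec2 fresnelAmp(float ni, float cosI, float nt, float cosT) {
    float rs = (ni * cosI - nt * cosT) / (ni * cosI + nt * cosT);
    float rp = (nt * cosI - ni * cosT) / (nt * cosI + ni * cosT);
    return vec2(rs, rp);
}

// Unpolarized reflectance of air / film / substrate at one wavelength.
// thickness in nm, cos0 = cosine of the incidence angle in air.
float filmReflectance(float lambda, float thickness, float cos0, float nSub) {
    float n1     = filmIndex(lambda);
    float sin0Sq = 1.0 - cos0 * cos0;
    // Snell's law gives the angles inside the film and the substrate.
    float cos1 = sqrt(max(0.0, 1.0 - sin0Sq / (n1 * n1)));
    float cos2 = sqrt(max(0.0, 1.0 - sin0Sq / (nSub * nSub)));
    vec2 r01 = fresnelAmp(1.0, cos0, n1, cos1);
    vec2 r12 = fresnelAmp(n1, cos1, nSub, cos2);
    // Phase picked up on one round trip through the film.
    float phase  = 4.0 * PI * n1 * thickness * cos1 / lambda;
    vec2  interf = 2.0 * r01 * r12 * cos(phase);
    vec2  refl   = (r01 * r01 + r12 * r12 + interf)
                 / (1.0 + r01 * r01 * r12 * r12 + interf);
    return 0.5 * (refl.x + refl.y);    // average of s and p
}

// Integrate the reflected spectrum against the color matching functions.
vec3 filmColorXYZ(float thickness, float cos0, float nSub) {
    vec3  xyz   = vec3(0.0);
    float yNorm = 0.0;
    for (int i = 0; i < NUM_SAMPLES; ++i) {
        float r = filmReflectance(lambdaNm[i], thickness, cos0, nSub);
        xyz   += r * lightSPD[i] * cmfXYZ[i];
        yNorm += lightSPD[i] * cmfXYZ[i].y;
    }
    return xyz / max(yNorm, 1e-6);     // perfect reflector gets Y = 1
}
```

With `cauchyB = 0.0` and `cauchyA = 1.09` this falls back to the current constant-index behaviour, so dispersion could be toggled at runtime like the other parameters. A lookup into tabulated index data would be a drop-in replacement for `filmIndex` once such data is available.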

Also, while the Uffizi Gallery cubemap is nice, I’d like to try out some other cubemaps (maybe from Humus) and some other models. Maybe a wobbling soap bubble?